Bruh. This is a Physics subject, right?

Umm yeah?

Well. You know... After ten activities in one semester I've been wondering. Where's the Physics?

But $SCIENCE$...

Yeah. You've been saying science this, science that. But I just don't feel like I've seen any Physics stuff.

N-n-n-NANI!?

Well fine. Let's do something Physicsy. Choosy >&↑^%#+}~@%#.

Uck don't you mean PRINCESS. Cuz I'm like super fabulous. Ewl

Back in first year high school, on the second week of classes, my EarthSci (ence) teacher asked us to find the value of the oh so mighty big $G$.

That's the universal gravitational constant, you pleb. At the next meeting he told us to memorize it... $BY \, HEART$. And so I did.

Yeah. It's one of those things I'll never forget.

$G = 6.67 \times 10^{-11} \, \rm{N \, m^2/kg^2}$

Big GEE equals six point sixty seven tayms ten to the negative eleven newton meter squared over kilo gram squared.

The following year our Physics 1 teacher happened to be the same person. This time around he made us memorize three equations $by \, heart$.

These are, in his words, "equations according to our friend Isaac." And so, right there in my "beautiful notebook," which was checked and graded every quarter, lie three beautiful equations in red boxes shaded with yellow.

These are the equations for velocity, force, and free-fall motion from rest.

I'd show you my 2008 notebook if my "memories box" had not "inexplicably" disappeared after my brother cleaned the room.

Yes! We are going to do free fall. Ahh, what better to relate to Physics than the kinematics of freely falling bodies?

Specifically we will determine the acceleration due to gravity on Earth.

Let's do this!

We took a video of a falling tennis ball.

The ball was dropped from a height of 150 cm, and the recording lasted about three seconds. That already includes a few bounces, because, as the third beautiful equation suggests, a freely falling body needs only about 0.55 s to travel 150 cm from rest.
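Where does the 0.55 s come from? Solving the free-fall-from-rest equation $d = \frac{1}{2} g t^2$ for $t$ gives $t = \sqrt{2d/g}$. Here is a tiny sketch of that arithmetic, assuming $g = 9.8 \, \rm{m/s^2}$ and no air resistance (`fall_time` is just an illustrative helper name):

```python
import math

def fall_time(height_m, g=9.8):
    """Time (s) for a body released from rest to fall height_m metres.

    Rearranges d = (1/2) g t^2 into t = sqrt(2 d / g); air resistance is ignored.
    """
    return math.sqrt(2 * height_m / g)

print(f"{fall_time(1.50):.2f} s")  # 0.55 s
```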

The video was supposedly shot at 60 frames per second. I only used the frames from when the ball reached its maximum height after the first bounce until it reached the bottom of the camera view.

I did not use the first fall because by the time the ball enters the camera's view it is moving so fast that it appears in only a few frames.

Also, as Roland Romero so eloquently puts it, the ball near the bottom goes $Bvvffffff$.

Dahell is "Bvvffffff"?

Oh you know. A cat goes "meow." A chicken goes "bok bok bok." A cow goes "mooooo."

And 'a comet goes "$Bvvffffff$"' - Romero (2016)

For those who don't understand idiot language, it means that the ball leaves afterimages.

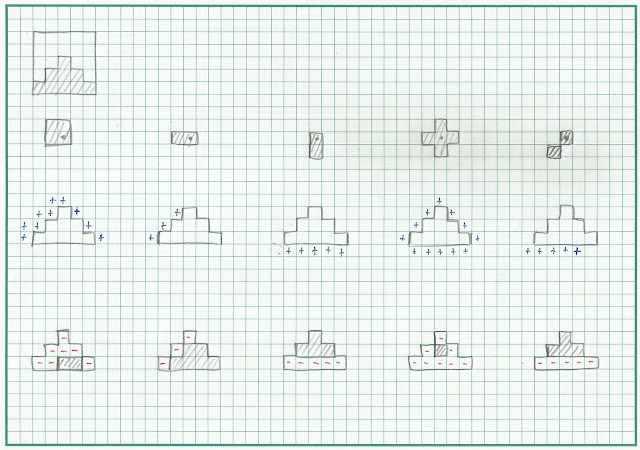

Anyway. To track the motion of the ball, I first segmented each frame. Remember Activity 7? Yeah, that. Here I used parametric segmentation with a Gaussian PDF, taking the whole ball as the region of interest (ROI). Somehow I could not get the non-parametric method to work here.
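For the curious, here is a minimal NumPy sketch of parametric segmentation. It is not the code I actually ran. It assumes pixels are described in normalized chromaticity coordinates $r = R/(R+G+B)$ and $g = G/(R+G+B)$, that $r$ and $g$ of the ROI follow independent Gaussians, and that a pixel belongs to the ball when its normalized likelihood exceeds a threshold. The threshold of 0.5 and the function names are hypothetical choices.

```python
import numpy as np

def chromaticity(img):
    """Convert an (H, W, 3) RGB image to normalized chromaticity r, g."""
    img = img.astype(float)
    total = img.sum(axis=2)
    total[total == 0] = 1.0  # avoid dividing by zero on pure black pixels
    return img[..., 0] / total, img[..., 1] / total

def gaussian_pdf(x, mu, sigma):
    return np.exp(-(x - mu) ** 2 / (2 * sigma ** 2)) / (sigma * np.sqrt(2 * np.pi))

def gaussian_segment(img, roi, threshold=0.5):
    """Parametric segmentation: fit Gaussians to the ROI's r and g, then threshold.

    Returns a boolean mask that is True where the pixel colour looks like the ROI.
    """
    r_roi, g_roi = chromaticity(roi)
    # small epsilon keeps sigma > 0 when the ROI colour is perfectly uniform
    mu_r, sigma_r = r_roi.mean(), r_roi.std() + 1e-6
    mu_g, sigma_g = g_roi.mean(), g_roi.std() + 1e-6
    r, g = chromaticity(img)
    likelihood = gaussian_pdf(r, mu_r, sigma_r) * gaussian_pdf(g, mu_g, sigma_g)
    likelihood /= likelihood.max()  # rescale so the best match is 1
    return likelihood > threshold
```

In practice `roi` would be a crop of the ball from one frame, and `gaussian_segment` would then be run on every frame.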

Here's how it looks.

Due to bad lighting and motion blur, the extracted ROI sometimes (more like often) has holes in it.

Now this is not really an issue as long as there aren't too many holes, or the holes are radially symmetric about the center of the ball.

Why? Because we will be using Blob Analysis to find the center of the ball.

As long as the calculated centroid is not too far from the center of the ball we are fine.
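Blob analysis can be sketched as: label the connected regions of the binary mask, keep the largest one, and average its pixel coordinates. This pure-Python/NumPy version assumes 4-connectivity and that the largest blob is the ball; `largest_blob_centroid` is a hypothetical helper, not the routine used on the actual frames.

```python
from collections import deque

import numpy as np

def largest_blob_centroid(mask):
    """Return the (row, col) centroid of the largest 4-connected blob, or None."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros_like(mask)
    rows, cols = mask.shape
    best = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0, c0] or seen[r0, c0]:
                continue
            # flood-fill the blob that starts at (r0, c0)
            blob = []
            queue = deque([(r0, c0)])
            seen[r0, c0] = True
            while queue:
                r, c = queue.popleft()
                blob.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols and mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
            if len(blob) > len(best):
                best = blob
    if not best:
        return None
    pts = np.array(best, dtype=float)
    return pts[:, 0].mean(), pts[:, 1].mean()

# a disk of radius 6 with a centered hole of radius 2, plus one stray pixel
yy, xx = np.mgrid[0:21, 0:21]
d2 = (yy - 10) ** 2 + (xx - 10) ** 2
ring = (d2 <= 36) & (d2 > 4)
ring[0, 0] = True
print(largest_blob_centroid(ring))  # centroid stays at (10, 10)
```

The ring shows why symmetric holes are harmless: the missing pixels cancel out around the center, and the stray pixel is ignored because it forms its own, smaller blob.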

The red dot signifies the position of the calculated centroid. We can observe that it is indeed not too far off from the center.

Now let's plot the position of that centroid as a function of time.

Looks quadratic. It could be right.

How exactly do we get the acceleration?

Well, what if we plot $y$ vs $t^2$? Measuring $y$ and $t$ from the top of the bounce, where the ball is momentarily at rest, we have $y = \frac{1}{2}at^2$, so the slope of this plot is $a/2$.
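Fitting that line can be sketched with NumPy's least-squares `polyfit`. This assumes `t_frames` counts frames from the apex and `y_px` is the centroid row in pixels (rows grow downward, so a falling ball gives a positive slope). The intercept soaks up the fact that the apex is not at pixel row 0. The data below are synthetic, not our measurements.

```python
import numpy as np

def acceleration_from_fit(t_frames, y_px):
    """Fit y = m * t^2 + b and return a = 2m in px/frame^2."""
    t = np.asarray(t_frames, dtype=float)
    y = np.asarray(y_px, dtype=float)
    slope, intercept = np.polyfit(t ** 2, y, 1)  # degree-1 fit in the variable t^2
    return 2 * slope

# synthetic check: released from rest at frame 0 at row 40, a = 2.35 px/frame^2
t = np.arange(0, 19)
y = 40 + 0.5 * 2.35 * t ** 2
print(round(acceleration_from_fit(t, y), 2))  # 2.35
```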

The slope of this plot is $381 \, \rm{px} / 324 \, \rm{frames}^2 \approx 1.18 \, \rm{px/frame^2}$, so $a = 2 \times 381/324 \approx 2.35 \, \rm{px/frame^2}$.

Now let's do some conversions. The whiteboard background is 80 cm wide, which spans 650 pixels in the video. We also know that the video was shot at 60 fps.

Hence $1 \, \rm{px} = 0.80/650 \, \rm{m} \approx 0.0012 \, \rm{m}$ and $1 \, \rm{frame}^2 = (1/60 \, \rm{s})^2 = 1/3600 \, \rm{s}^2 \approx 0.00028 \, \rm{s}^2$.

So our final measurement for the acceleration due to gravity is $10.07 \, \rm{m/s^2}$, a 2.8% deviation from the accepted value of $9.8 \, \rm{m/s^2}$.
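The unit conversion and the percent deviation boil down to two one-liners. In this sketch (hypothetical helper names), `metres_per_px` would be 0.80/650 for the whiteboard and `fps` would be 60; since one frame lasts $1/\rm{fps}$ seconds, a value in $\rm{px/frame^2}$ is multiplied by the metres per pixel and by $\rm{fps}^2$.

```python
def px_per_frame2_to_m_per_s2(a_px, metres_per_px, fps):
    """Convert an acceleration from px/frame^2 to m/s^2."""
    # px -> m uses the scale factor; 1/frame^2 -> 1/s^2 multiplies by fps^2
    return a_px * metres_per_px * fps ** 2

def percent_deviation(measured, accepted):
    """Absolute deviation of measured from accepted, as a percentage."""
    return abs(measured - accepted) / accepted * 100

print(round(percent_deviation(10.07, 9.8), 1))  # 2.8
```

Because the conversion is linear in `metres_per_px`, a 1% error in the 80 cm / 650 px calibration shifts the final acceleration by 1%.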

Aaaand that's the last activity. Good job me. Good job.

I will give myself one last solid $10/10!$

$GG \, EZ$

I would like to acknowledge Anthony Fox and Roland Romero as my groupmates in the data gathering of this experiment.

Special thanks to Kit Guial for extracting the frames from the video.

Shout out to my favorite high school teacher who made me want to take a Physics course, Mr. Delfin C. Angeles.

Weird thanks to my blog's second voice. My imaginary fan. Yey.

Uck Puh Lease. Call me PRINCESS. Like ew. This is so embarrassing. Oh my Gawsh. Ewl. Like whatever. Se ya... neva.

A very special thanks to Dr. Maricor Soriano for a wonderful semester and for all the lessons I have learned.

:D

- Bear In The Big Blue House - Goodbye Song

Bye Now.